AI and the Possibilities for the Legal Profession — and Legal Education

When a new Yale Law School course — Artificial Intelligence, the Legal Profession, and Procedure — convened in the spring 2023 term, it happened to arrive on the heels of the generative-AI hype wave whipped up by the release of ChatGPT.

The timing was fortuitous, according to the course’s instructors, John A. Garver Professor of Jurisprudence William Eskridge Jr. ’78 and visiting lecturers Jeffrey Chivers and Theodore Rostow ’17, especially since their discussions about creating the class had begun in 2019. Even then, Eskridge sensed a pent-up demand among Law School students for a class exploring the implications of artificial intelligence tools for law and the legal profession.

“AI has already changed the practice of law,” he said. “It’s changing the structure of the legal profession, the procedures followed by the courts, and forms of adjudication. Our students understand they’re going to be living with this, so part of our agenda is to get them thinking intellectually about the intersection among AI, the legal profession, and rules of procedure.”

Elena Sokoloski ’25 enrolled in the class because she’d begun to wonder how AI could benefit public interest law, the area she hopes to pursue in her career. She’d volunteered for legal aid organizations before law school and was discouraged by the inefficiencies that ultimately limited access to legal services.

“I noticed a lot of places where the work that we were doing was really ripe for automation,” she said. “But instead, attorneys and paralegals were spending a lot of time doing it. That got me thinking about where tech might be able to increase capacity, especially to help low-income people.”

Early AI tools are already used across the legal profession to increase efficiency, but this is just the beginning. Chivers, who together with Rostow founded a law firm as well as a tech company to focus on AI-assisted litigation, said that AI was first used in litigation over a decade ago in “e-discovery,” where an AI system can learn to predict tagging patterns. As that use has become increasingly prevalent, AI tools have also been developed to assist with legal research and, most recently, even the generation of legal writing, a realm where many lawyers expected that they would maintain clear superiority over machines for the foreseeable future.

But they may not: Chivers said that the language models that have been released over the past few months represent a remarkably substantial advancement in English-language capability by a machine-learning system. That should cause the industry to “adjust their timeframes as to when certain things can be accomplished by artificial intelligence in the legal domain,” he said.

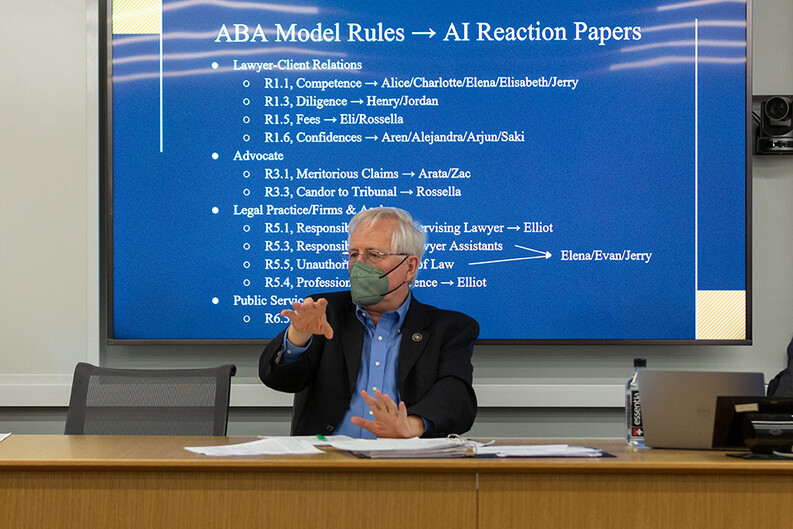

The course focuses on litigation practice; it doesn’t delve into the capability of AI systems to, for example, write and review contracts or perform due diligence. The instructors are particularly interested in how legal systems and the litigation process will absorb the impact of artificial intelligence, and how they will evolve as a result. Eskridge brings to these high-level discussions his own expertise in various strands of legal theory, especially his interests in statutory interpretation and ethics.

The advancing technologies raise important ethics issues, said Eskridge, and “ours is a course where ethics issues have come up constantly.”

At the same time, the instructors believed it was important to ground the students at the outset with an in-depth introduction to the technical inner workings of artificial intelligence, which was the focus of the first three class meetings. This foundation of technical proficiency paid off, Chivers said, in terms of the “level of technical attunement students are displaying in their response papers.”

Together, the instructors made an effort to fill the class with students representing varying amounts of technical expertise and worked to “get all of the students up to a baseline of technical understanding so that they’re able to share ideas in a way that isn’t completely removed from the technical realities of today,” Chivers said.

Sokoloski, who entered the class with relatively little technical knowledge, said going deep into the mechanics behind AI language models has helped her better grapple with the thorny questions that AI raises for the legal profession.

“This understanding helped me more deeply conceptualize not just the practical challenges of using a tool that’s sometimes going to spit out hallucinations,” she said, “but also the ethical questions that go to the heart of what it means to practice law.”

Many of the class discussions about how AI will transform the legal profession have identified the risks that accompany these technologies’ use while also expressing optimism about how these tools can improve the legal system, said Rossella Gabriele ’25.

“How can we use AI tools to increase access to courts; to increase access to chatbots that can give you advice about whether something is worth taking to court, or how much you should demand for a settlement; and to help people advocate for themselves?” said Gabriele.

For his part, Eskridge said he’s heartened by how actively students have considered the questions raised within the class — and how eager they’ve been to collaboratively steer its direction.

“We told the students at the beginning: ‘This is an experimental course,’” Eskridge said, adding that the area is so new that it lacks an established literature. In fact, the instructors have found themselves updating the syllabus nearly every week in response to new tech developments. “And the students have raised things that we had not thought about,” Eskridge added. “It’s been a very dynamic class, paralleling in many ways the idea of artificial intelligence itself.”